ABO (Ad Set Budget Optimization) allocates fixed budgets to individual ad sets. It gives you complete spend control, but it requires hands-on management and a high creative hit rate to be cost-effective. CBO (Campaign Budget Optimization), also known as Advantage+ campaign budget, lets Meta distribute budget across ad sets based on predicted performance. Many advertisers do best with CBO plus temporary minimum ad set spend limits. Elite media buyers use both: ABO for controlled testing, then they scale winners into CBO campaigns using post ID duplication.

The ABO vs CBO debate has been raging for years, and most of the advice you'll find online misses a critical nuance. Advertisers typically pick one strategy and stick with it, or they flip-flop without a clear framework for when each approach makes sense.

Here's the uncomfortable truth most content glosses over: ABO is an elite operator's strategy. It works beautifully when your creative hit rate is high and you have time for hands-on management. But if your hit rate isn't stellar, your ABO losses compound fast and your scaling campaigns have to cover those losses.

This guide cuts through the noise with a practitioner-driven framework. You'll learn when ABO actually makes sense (hint: it's more specific than "when you want control"), why CBO with minimum spend is the happy medium for most advertisers, and how top media buyers use both strategies together for testing and scaling.

What Is ABO (Ad Set Budget Optimization)?

ABO means setting your budget at the ad set level rather than the campaign level. Each ad set gets exactly what you allocate, regardless of performance.

If you create a campaign with five ad sets and give each one $50/day, each ad set spends $50/day. The winner doesn't get more. The loser doesn't get cut. Every ad set gets its full allocation.

How it works in practice:

- Budget is fixed per ad set

- Each ad set spends its allocation regardless of performance

- You control exactly where money goes

- Meta still optimizes delivery within each ad set, but can't shift budget between them

Naming note: Unlike CBO, which is also known as "Advantage+ campaign budget," ABO is still called Ad Set Budget Optimization in Ads Manager. When you toggle off Advantage+ campaign budget at the campaign level, you're using ABO.

The key characteristic of ABO is forced spend distribution. If you want every creative concept or audience to get equal testing budget, ABO guarantees that happens. CBO cannot make this guarantee.

What Is CBO (Campaign Budget Optimization)?

CBO, also known as Advantage+ campaign budget in Ads Manager, sets your budget at the campaign level. Meta's algorithm decides how to distribute that budget across your ad sets based on predicted performance.

If you create a campaign with five ad sets and a $250/day campaign budget, Meta might spend $150 on Ad Set A, $60 on Ad Set B, $30 on Ad Set C, and almost nothing on D and E. The algorithm chases what it predicts will perform best.
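To make the contrast concrete, here's a toy Python sketch. It is not Meta's algorithm, which isn't public. It simply splits a campaign budget in proportion to made-up "predicted performance" scores. The function names and scores are hypothetical and were picked so the output lines up with the $150 / $60 / $30 example above, with D and E getting $5 each.

```python
# Toy comparison of ABO vs a score-proportional stand-in for CBO.
# Meta does not publish its CBO allocation logic; the scores below are
# hypothetical predicted-performance numbers, not anything Meta exposes.


def abo_allocation(per_ad_set_budget: float, ad_sets: list[str]) -> dict[str, float]:
    """ABO: every ad set receives exactly the budget you assign it."""
    return {name: per_ad_set_budget for name in ad_sets}


def proportional_allocation(campaign_budget: float, scores: dict[str, float]) -> dict[str, float]:
    """Toy CBO: split one campaign budget in proportion to each ad set's score."""
    total = sum(scores.values())
    return {name: campaign_budget * score / total for name, score in scores.items()}


ad_sets = ["A", "B", "C", "D", "E"]
print(abo_allocation(50, ad_sets))
# {'A': 50, 'B': 50, 'C': 50, 'D': 50, 'E': 50}
print(proportional_allocation(250, {"A": 30, "B": 12, "C": 6, "D": 1, "E": 1}))
# {'A': 150.0, 'B': 60.0, 'C': 30.0, 'D': 5.0, 'E': 5.0}
```

Both campaigns spend $250/day in total. Only the distribution changes.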

How it works in practice:

- Budget is set at campaign level

- Meta distributes spend based on predicted results

- High performers get more budget automatically

- Low performers get starved of spend

The Pareto effect is real. In CBO campaigns, you'll often see 20% of your ad sets getting 80% of the spend. One case study showed a CBO campaign spending $90 on one ad set and $10 on another on Day 1, essentially giving no fair read to the second ad set.

This is by design. CBO optimizes for efficiency by finding what works and doubling down. It's not trying to test everything equally.

ABO vs CBO: Key Differences at a Glance

| Factor | ABO | CBO (Advantage+ campaign budget) |
|---|---|---|
| Budget Level | Ad Set | Campaign |
| Control | Full manual control | Algorithm-driven |
| Spend Distribution | Fixed per ad set (usually equal) | Performance-based (often 80/20) |
| Management Required | High (hands-on daily) | Lower (check weekly) |
| Best For | Testing with high hit rate | Scaling proven winners |
| Risk Profile | Losses compound if hit rate is low | May starve promising ad sets prematurely |
| Learning Phase | Each ad set learns independently | Can concentrate budget to exit learning faster |
| Scaling | Manual per-ad-set increases (large jumps risk a learning reset) | Raise one campaign budget (fewer resets, though large jumps can still re-trigger learning) |

The fundamental tradeoff: ABO gives you control at the cost of efficiency. CBO gives you efficiency at the cost of control.

Neither is inherently better. As consultant Levi Steede puts it: "Most advertisers treat ABO vs CBO like picking a favorite sports team. It's not about which one is 'better.' It's about using the right tool for the right situation."

When ABO Actually Makes Sense

ABO isn't for everyone. It's specifically for advertisers who meet certain criteria. Let's be honest about what those are.

You Have a High Hit Rate

This is the most important factor, and most content ignores it entirely.

Hit rate means the percentage of your ads that work. If you launch 10 ads and 7-8 of them hit your target metrics, you have a high hit rate. If only 2-3 work, you have a low hit rate.

ABO works best when your hit rate is already high. Why? Because ABO spends equally on everything. If you have 10 ad sets and 7 are winners, you're getting efficient spend on 70% of your budget. But if only 2 are winners, you're wasting 80% of your budget on losers that CBO would have cut automatically.
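The arithmetic behind that comparison is simple enough to sketch. This hypothetical helper assumes every ad set got the same budget. It counts all spend on ad sets that missed target as waste, which ignores any revenue those ads still produced.

```python
def abo_waste_share(results: list[bool]) -> float:
    """Share of an evenly split ABO budget spent on ad sets that missed target.

    results: one entry per ad set, True if it hit your target metrics.
    Assumes every ad set received the same budget.
    """
    if not results:
        raise ValueError("need at least one ad set result")
    misses = sum(1 for hit in results if not hit)
    return misses / len(results)


seven_winners = [True] * 7 + [False] * 3
two_winners = [True] * 2 + [False] * 8
print(f"{abo_waste_share(seven_winners):.0%}")  # 30%
print(f"{abo_waste_share(two_winners):.0%}")    # 80%
```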

As Levi Steede explains: "ABO lets you push spend directly into specific winners... but if you're not confident in your hit rate yet, use CBO."

The compounding problem: If your hit rate is low, ABO losses add up fast. Every underperforming ad set burns through its full allocation. Your scaling campaigns have to generate enough profit to cover all that testing waste. This is the hidden cost of ABO that most guides never mention.

You Trust Your Setup Completely

ABO makes sense when you have confidence in your entire funnel. Your creative process is proven. Your landing pages convert. Your audiences are dialed in.

In this scenario, you know your ads will perform. You just need data on all of them. ABO ensures every concept gets a fair test with equal spend.

Depesh Mandalia notes: "ABOs work well because you can control them far better... If your ABO is performing well, it means you've put the right inputs in - good product, good targeting, good funnel."

You Need to Force Spend Through Everything

Sometimes CBO's optimization works against you. The algorithm might identify an early leader based on CTR or engagement, then starve other ad sets that could have been long-term winners with more data.

If you have 5 creative concepts and need genuine performance data on all 5, ABO is the only way to guarantee it. CBO might spend 90% on one ad set before the others even get a chance.

Barry Hott, a well-known Facebook ads strategist, prefers ABO for creative testing specifically for this reason: "I prefer to do that with manual budgets... The reason I like to control the budget is to get an even read on everything. I'm consciously choosing to perform a little worse [overall] but to have a better understanding of what is working."

You're Prepared for Hands-On Management

ABO requires active management. You need clear rules for:

- When to pause underperformers

- How long to let ads run before judging

- When to increase budget on winners

- How to avoid resetting learning phase with budget changes

If you're not checking campaigns daily and making decisions based on fresh data, ABO will waste money. Set-and-forget doesn't work here.

When CBO Makes More Sense (For Most Advertisers)

For the majority of advertisers, though, CBO is the better default choice. Here's why.

CBO's algorithm makes budget allocation decisions faster than any human can. It processes signals across all your ad sets simultaneously and shifts spend toward predicted winners in real time.

If you don't have time to monitor campaigns daily, CBO handles optimization automatically. It's the "set and check" approach versus ABO's "set and manage" requirement.

You're Scaling Proven Winners

Once you've validated which creatives and audiences work, CBO becomes the efficient choice. You already know what performs. Now you want Meta to allocate a larger budget optimally across your proven winners.

This is where CBO shines. You're not testing. You're maximizing results from known performers.

Your Hit Rate Is Uncertain

If you're not confident that most of your ads will work, CBO provides a safety net. The algorithm will quickly cut spend to losers and concentrate budget on anything showing promise.

As Marin Istvanic notes: if your "hit rate... is very low," running many ABO tests would be "very inefficient spend as not many ads would turn out [winners]."

You Prefer Stability When Scaling

With ABO, raising an ad set's budget too sharply can send it back into the learning phase. A common rule of thumb is to keep each increase under about 20%.

With CBO, you raise a single campaign budget and the algorithm distributes the additional spend across ad sets. Scaling happens in one place instead of across many ad set budgets. This generally means fewer learning resets and makes CBO more stable for aggressive scaling, although very large jumps in campaign budget can still re-trigger learning.
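If you do scale an ABO ad set by hand, capping each increase turns one big jump into many small ones. This sketch uses the 20% rule of thumb as its cap. The $50 starting budget, the $500 target, and the function name are hypothetical. Staying under the cap reduces the chance of re-entering learning but doesn't eliminate it.

```python
def budget_steps(start: float, target: float, max_increase: float = 0.20) -> list[float]:
    """Budget after each increase, never raising by more than max_increase."""
    if start <= 0 or target <= start:
        raise ValueError("need 0 < start < target")
    budgets = [start]
    while budgets[-1] < target:
        # Grow by at most max_increase, and stop exactly at the target
        budgets.append(round(min(budgets[-1] * (1 + max_increase), target), 2))
    return budgets


plan = budget_steps(50, 500)
print(len(plan) - 1, plan[-1])  # 13 500
```

Going from $50 to $500/day takes 13 capped increases. That is one reason scaling a single campaign budget in CBO is less hands-on.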

The Happy Medium: CBO with Minimum Ad Set Spend

Here's the approach that works for most advertisers but rarely gets covered in depth. It combines CBO's efficiency with ABO's testing fairness.

How It Works

- Set up a CBO campaign with multiple ad sets

- Enable minimum daily spend per ad set (e.g., $20-50 each)

- Run for approximately one week

- Remove the minimum spend constraints

- Let Meta optimize freely with the data it's gathered

The minimum spend forces every ad set to get initial budget, similar to ABO. But once you remove it, CBO takes over and allocates based on performance data.
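A toy model helps show what the minimums actually do. This sketch reserves the minimum for every ad set and splits only the remainder by score. Passing `minimum=None` models the campaign after you remove the limits. The function, the scores, and this allocation rule are hypothetical and do not represent Meta's real logic. The $20 floor is the low end of the example range above.

```python
from typing import Optional


def allocate_with_floor(campaign_budget: float, scores: dict[str, float],
                        minimum: Optional[float]) -> dict[str, float]:
    """Toy CBO: reserve `minimum` per ad set, split the rest by score.

    Pass minimum=None to model the campaign after the floors are removed.
    """
    floor = minimum or 0.0
    reserved = floor * len(scores)
    if reserved > campaign_budget:
        raise ValueError("ad set minimums exceed the campaign budget")
    flexible = campaign_budget - reserved
    total = sum(scores.values())
    return {name: floor + flexible * s / total for name, s in scores.items()}


scores = {"A": 30, "B": 12, "C": 6, "D": 1, "E": 1}  # hypothetical scores
print(allocate_with_floor(250, scores, minimum=20))
# week 1: {'A': 110.0, 'B': 56.0, 'C': 38.0, 'D': 23.0, 'E': 23.0}
print(allocate_with_floor(250, scores, minimum=None))
# after removal: {'A': 150.0, 'B': 60.0, 'C': 30.0, 'D': 5.0, 'E': 5.0}
```

With the floors in place, D and E each get $23 instead of $5. That is enough to produce some data before the algorithm is allowed to concentrate spend.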

The Critical Step Most People Miss

Remove the minimum spend after approximately one week.

This is crucial. If you keep forcing spend indefinitely, you're essentially running ABO with extra steps. You've turned on CBO but are negating its optimization.

After about a week, Meta usually has enough data to make informed allocation decisions. Removing the minimums lets the algorithm do what it does best: concentrate budget on winners.

Why This Works

You get initial testing fairness (every ad set gets minimum spend to gather data) plus long-term optimization (CBO concentrates budget on proven performers).

It's the best of both worlds for advertisers who:

- Want fair creative testing

- Don't have extreme confidence in their hit rate

- Prefer CBO's hands-off management style

- Are willing to check back in a week to remove constraints

Overusing spend limits works against CBO. As Jon Loomer notes: "Some advertisers use ad set spend limits... in fear Meta will overspend on one ad set... but in most cases, this defeats the whole purpose [of CBO]... If you don't trust the algorithm to distribute budget optimally between ad sets, you should probably just use ad set budgets instead."

Use minimums sparingly and temporarily. If you find yourself constantly constraining CBO, you might actually want ABO.

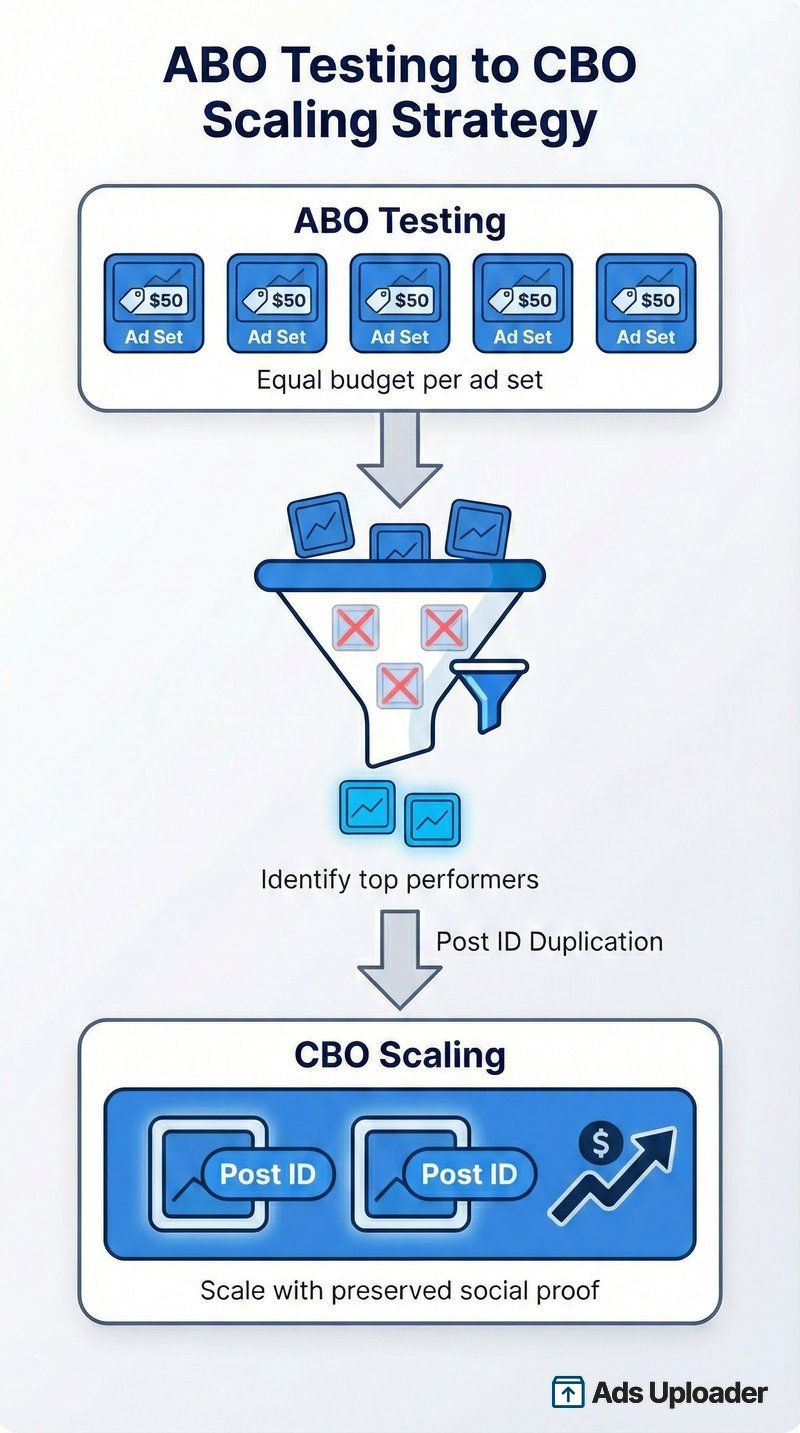

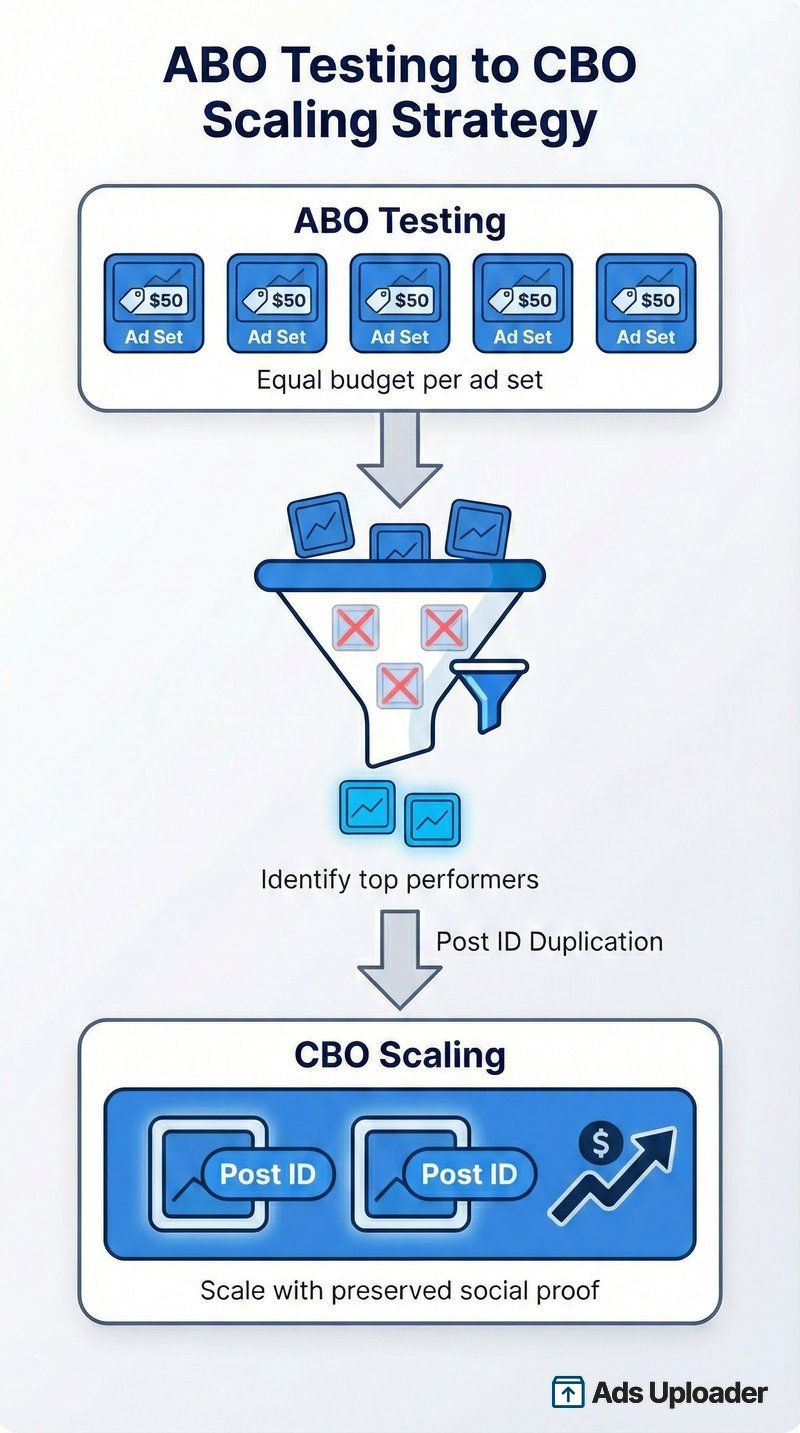

How Top Media Buyers Use Both

The most sophisticated advertisers don't choose ABO or CBO. They use both strategically in a workflow that maximizes testing clarity and scaling efficiency.

ABO for Testing

Use ABO campaigns for initial creative testing:

- Equal budget per ad set (each creative concept gets fair spend)

- Same targeting across ad sets (isolate creative as the variable)

- Hands-on monitoring to identify winners quickly

- Clear performance rules for what qualifies as a "winner"

The goal isn't efficiency. The goal is data clarity. You want to know definitively which creatives work before scaling.

CBO for Scaling

Once you've identified winners in ABO testing, move them to CBO campaigns for scaling:

- Use cost caps, bid caps, or ROAS targets to control efficiency

- Let Meta allocate budget across proven winners

- Scale the campaign budget, which causes fewer learning resets than raising individual ad set budgets

- Monitor but don't micromanage

The Scaling Workflow

Here's the step-by-step process elite media buyers use:

- Test new creatives in ABO campaign - Equal spend per ad set, same broad targeting, hands-on management

- Identify winners based on your performance metrics (CPA, ROAS, whatever matters for your account)

- Create a new CBO campaign with a cost cap or bid cap bid strategy for controlled scaling

- Use post ID duplication to move winning ads into the CBO campaign while preserving social proof (likes, comments, shares)

- Scale budget in CBO while continuing to test new creatives in your ABO campaign

This workflow separates testing from scaling. ABO handles the messy, expensive work of finding winners. CBO handles the efficient work of maximizing those winners.

Why Post ID Duplication Matters

When you duplicate an ad normally, you create a new post and lose all engagement. Your winning ad with 500 likes starts at zero in the new campaign.

Post ID duplication preserves that social proof by using the same underlying Facebook post. Tools like Ads Uploader include this in their Duplicator panel, making it easy to scale winners while maintaining accumulated engagement.
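If you reuse a post programmatically rather than through a tool, Meta's Marketing API lets an ad creative point at an existing page post through the AdCreative `object_story_id` field, in the form `<page_id>_<post_id>`. The sketch below only builds request bodies and sends nothing. The helper names and IDs are hypothetical. Before relying on it, confirm the field names and endpoints in the current Marketing API documentation. Creatives are created under `/act_<AD_ACCOUNT_ID>/adcreatives` and ads under `/act_<AD_ACCOUNT_ID>/ads`.

```python
import json


def post_id_creative_payload(page_id: str, post_id: str, name: str) -> dict:
    """Body for creating an ad creative that reuses an existing page post."""
    # object_story_id references the existing post, so engagement stays attached
    return {"name": name, "object_story_id": f"{page_id}_{post_id}"}


def ad_payload(ad_set_id: str, creative_id: str, name: str) -> dict:
    """Body for creating an ad in the CBO scaling ad set from that creative."""
    return {
        "name": name,
        "adset_id": ad_set_id,
        "creative": json.dumps({"creative_id": creative_id}),
        "status": "PAUSED",  # launch paused so you can review before spend starts
    }


# All IDs below are placeholders
print(post_id_creative_payload("111", "222", "Winner A - post ID"))
print(ad_payload("333", "444", "Winner A - CBO scale"))
```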

Common Mistakes to Avoid

Even experienced advertisers make these errors with ABO and CBO. Here's what to watch for.

Running ABO with a Low Hit Rate

If most of your ads fail, ABO just spreads losses evenly. You're guaranteeing spend on losers that CBO would have cut.

Solution: Be honest about your hit rate. If it's low, use CBO and focus on improving your creative process. Or use the CBO with minimum spend approach to get testing fairness with less waste.

Forcing Spend Indefinitely in CBO

Setting minimum spends and never removing them defeats CBO's purpose. You've opted into algorithmic optimization but are preventing it from working.

Solution: Use minimum spends only for initial testing (about one week), then remove them. If you can't bring yourself to remove them, you probably want ABO.

Mixing Strategies Without a System

Randomly switching between ABO and CBO based on gut feeling leads to inconsistent data and wasted budget.

Solution: Define your rules before launching:

- What qualifies as a winner in ABO testing?

- When do you move winners to CBO?

- What bid strategy do you use for scaling?

- When do you pause underperformers?

Having clear rules removes emotion from decisions.
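One way to pin those rules down is to write them out as code or a checklist before launch. This Python sketch covers the winner and pause questions. The class name and every threshold (3 days, spend of at least 2x target CPA, a $20 target CPA) are hypothetical examples. Replace them with your own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TestingRules:
    """Hypothetical rules; every threshold is an example, not a benchmark."""
    target_cpa: float
    min_days: int = 3                # don't judge before this many days live
    min_spend_multiple: float = 2.0  # don't judge before spend >= 2x target CPA


def decide(rules: TestingRules, days_live: int, spend: float, conversions: int) -> str:
    """Return 'keep testing', 'pause', or 'move to CBO' for one ABO ad set."""
    if days_live < rules.min_days or spend < rules.min_spend_multiple * rules.target_cpa:
        return "keep testing"
    if conversions == 0 or spend / conversions > rules.target_cpa:
        return "pause"
    return "move to CBO"


rules = TestingRules(target_cpa=20)
print(decide(rules, days_live=2, spend=100, conversions=6))  # keep testing
print(decide(rules, days_live=4, spend=120, conversions=8))  # move to CBO (CPA $15)
print(decide(rules, days_live=4, spend=120, conversions=3))  # pause (CPA $40)
```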

Expecting CBO to Fix Bad Creatives

CBO optimizes delivery. It can't turn losers into winners. If all your creatives are weak, CBO will just pick the least bad one and spend your budget on mediocrity.

Solution: CBO is for scaling good ads, not salvaging bad ones. Focus on creative quality first.

Too Many Ad Sets in CBO

If you have 10 ad sets in a CBO campaign with a $50/day budget, each ad set averages $5/day, which is too little spend to generate meaningful data. A common guideline is 3-5 ad sets for most CBO campaigns.

Solution: Consolidate. Fewer ad sets with more budget each tend to perform better than many ad sets with fragmented budgets.
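A quick back-of-the-envelope check shows why fragmentation hurts. This sketch assumes a hypothetical $25 CPA and an even split across ad sets. It then compares the result with the roughly 50 conversions per week an ad set needs to exit learning. The function name is illustrative.

```python
def weekly_conversions_per_ad_set(daily_budget: float, num_ad_sets: int, cpa: float) -> float:
    """Expected weekly conversions per ad set, assuming an even budget split.

    CBO won't split evenly, so for the weakest ad sets this is a best case.
    """
    per_ad_set_daily = daily_budget / num_ad_sets
    return per_ad_set_daily * 7 / cpa


for n in (10, 3):
    print(n, round(weekly_conversions_per_ad_set(50, n, cpa=25), 1))
# 10 1.4
# 3 4.7
```

Even three ad sets at $50/day fall far short of 50 conversions a week, so consolidation works best alongside a budget sized to your CPA.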

Making Frequent Changes

Constantly tweaking budgets, pausing/unpausing ad sets, or editing targeting resets learning and prevents optimization.

Solution: Let campaigns run at least a week before making major changes. The algorithm needs stable conditions to learn.

Setting Up Each Strategy (Quick Start)

Setting Up an ABO Campaign

- Create new campaign in Ads Manager

- Toggle OFF "Advantage+ campaign budget" at campaign level

- Create ad sets with individual daily budgets

- Budget recommendation: 2x your target CPA per ad set per day (e.g., $40/day for $20 CPA target)

- Use same targeting across ad sets if testing creatives

- Monitor daily and pause underperformers after sufficient data (usually 3-5 days minimum)

Pro tip: Name your ad sets with the budget included (e.g., "Broad - Creative A - $50/day") for quick reference.
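The 2x-CPA guideline translates directly into a total daily budget for the test. Here it is as a small Python helper. The function name and the five-ad-set example are hypothetical.

```python
def abo_test_budget(target_cpa: float, num_ad_sets: int, multiple: float = 2.0) -> tuple[float, float]:
    """Return (daily budget per ad set, total daily budget) for an ABO test."""
    per_ad_set = target_cpa * multiple
    return per_ad_set, per_ad_set * num_ad_sets


per_ad_set, total = abo_test_budget(target_cpa=20, num_ad_sets=5)
print(per_ad_set, total)  # 40.0 200.0
```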

Setting Up CBO with Minimum Spend

- Create new campaign with "Advantage+ campaign budget" toggled ON

- Set campaign daily budget (total across all ad sets)

- Create ad sets

- For each ad set: go to Budget & Schedule > Ad Set Spend Limits

- Set minimum daily spend (e.g., 10-20% of campaign budget per ad set)

- Launch and run for approximately one week

- After one week: return to each ad set and remove minimum spend limits

- Let CBO optimize freely going forward
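Before launch, check how much of the budget your minimums lock up. A 20% minimum on each of five ad sets leaves CBO nothing to reallocate. The sketch below uses a hypothetical $250/day campaign with five ad sets. It also works out the date to remove the limits. The function names and launch date are placeholders.

```python
from datetime import date, timedelta


def flexible_share(campaign_budget: float, minimum_per_ad_set: float, num_ad_sets: int) -> float:
    """Fraction of the campaign budget CBO can still shift between ad sets."""
    reserved = minimum_per_ad_set * num_ad_sets
    if reserved > campaign_budget:
        raise ValueError("minimums add up to more than the campaign budget")
    return (campaign_budget - reserved) / campaign_budget


def removal_date(launch: date, window_days: int = 7) -> date:
    """Day to go back and clear the ad set minimums."""
    return launch + timedelta(days=window_days)


# $250/day campaign, five ad sets: 10% ($25) vs 20% ($50) minimums each
print(f"{flexible_share(250, 25, 5):.0%}")  # 50%
print(f"{flexible_share(250, 50, 5):.0%}")  # 0%
print(removal_date(date(2024, 6, 3)))       # 2024-06-10
```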

Budget formula: Daily budget per ad set = (Target CPA × 50 conversions) ÷ 7 days. Multiply by the number of ad sets to get the campaign daily budget. This gives each ad set enough budget to potentially exit the learning phase within a week.
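Here's the formula as a Python helper. Meta counts the learning phase per ad set, so the sketch applies (Target CPA × 50) ÷ 7 per ad set and multiplies by the number of ad sets to get the campaign budget. The $25 CPA and three ad sets are hypothetical inputs.

```python
def learning_phase_budget(target_cpa: float, num_ad_sets: int,
                          conversions: int = 50, days: int = 7) -> tuple[float, float]:
    """Return (daily budget per ad set, daily campaign budget)."""
    per_ad_set = target_cpa * conversions / days
    return per_ad_set, per_ad_set * num_ad_sets


per_ad_set, campaign = learning_phase_budget(target_cpa=25, num_ad_sets=3)
print(f"${per_ad_set:.2f} per ad set, ${campaign:.2f} campaign")
# $178.57 per ad set, $535.71 campaign
```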

Frequently Asked Questions

Is CBO better than ABO for Facebook ads?

Neither is universally better. CBO is better for scaling proven winners with less management overhead. ABO is better for controlled creative testing when you have a high hit rate. Most advertisers benefit from CBO with minimum spend for the first week, then letting the algorithm optimize. The best approach is using both: ABO for testing, CBO for scaling.

How much budget do I need for CBO?

Budget your campaign so each ad set can potentially generate 50 conversions per week. Formula: (Target CPA × 50) ÷ 7 = minimum daily budget per ad set. For example, with a $25 CPA target, each ad set needs about $1,250/week (roughly $179/day) to give the algorithm enough data to exit learning. With multiple ad sets, multiply accordingly.

Does CBO spend more money than ABO?

CBO doesn't inherently spend more. It spends the same campaign budget but allocates it differently. CBO concentrates spend on predicted winners, which can feel like "overspending" on certain ad sets. But the total spend matches your campaign budget. The difference is efficiency of allocation, not total expenditure.

Can I switch from ABO to CBO mid-campaign?

Technically yes, but it's not recommended. Changing budget structure mid-campaign resets learning and can hurt performance. Better approach: create a new CBO campaign and move winning ads (using post ID duplication to preserve social proof) rather than converting an existing ABO campaign.

What happened to CBO? Why is it called Advantage+ campaign budget now?

Meta renamed Campaign Budget Optimization to "Advantage+ campaign budget" as part of its broader "Advantage+" product naming convention. The functionality is identical. When you see "Advantage+ campaign budget" toggled on at the campaign level, that's CBO.

How do I know if my hit rate is high enough for ABO?

Track your creative testing results over time. If 6-7 out of 10 new ads hit your target metrics, your hit rate is high enough for ABO to be efficient. If only 2-3 out of 10 work, CBO with minimum spend is a better choice. There's no universal threshold, but anything below 50% hit rate makes ABO expensive.

Where do Advantage+ Sales campaigns fit in?

Advantage+ Shopping (now Advantage+ Sales) campaigns use CBO under the hood but add automated audience expansion and creative optimization. They've become popular for e-commerce, with Meta reporting 70% YoY adoption growth. The ABO vs CBO choice doesn't apply to Advantage+ campaigns because budget allocation is fully automated. Many advertisers run Advantage+ alongside traditional ABO/CBO campaigns.

Conclusion

The ABO vs CBO debate isn't about finding the "right" answer. It's about matching your strategy to your situation.

Key takeaways:

- ABO requires high hit rates. If most of your ads don't work, ABO just spreads losses evenly. Be honest about your creative success rate before committing to ABO.

- CBO is the better default for most advertisers. Less management, automatic optimization, stable scaling. Unless you have specific testing needs, CBO with minimum spend is the happy medium.

- The minimum spend trick works. Force initial spend with minimums, then remove after a week. You get testing fairness plus long-term optimization.

- Top advertisers use both. ABO for controlled testing, CBO for efficient scaling. Post ID duplication bridges the two by preserving social proof when moving winners.

- Campaign structure isn't the game-changer. As Andrew Foxwell's team puts it: "Account setups - CBO vs. ABO, consolidation vs. splitting - aren't the game-changers people make them out to be... the real growth comes from creative testing, strong data feedback loops, and consistent execution."

The "best" strategy depends on your hit rate, your available time for management, and whether you're testing or scaling. Not on what some guru says works for their clients.

For teams testing creative at scale, bulk upload tools like Ads Uploader eliminate the manual overhead of launching multiple ad variations. Whether you're setting up ABO test campaigns with 50 creative variations or moving winners into CBO scaling campaigns via post ID duplication, automation handles the tedious work so you can focus on strategy.